A large language model has to process these multiple languages seamlessly, and building an AI model capable of understanding most of them, if not all, remains complicated.

TRAINING AI ON LOCAL LANGUAGES

One challenge that BharatGen, a consortium funded by India's government, faces in training its large language model is a lack of online content in Indian languages.

The consortium said that while roughly half of all the data available on the internet is in English, Indian languages make up barely 1 per cent.

Literary works in many Indian languages have never been digitised, while a wealth of cultural and traditional knowledge has been passed down orally for generations without ever being stored online.

On a more positive note, experts said that the diversity of languages and data collected from local sources could help create AI models with fewer biases.

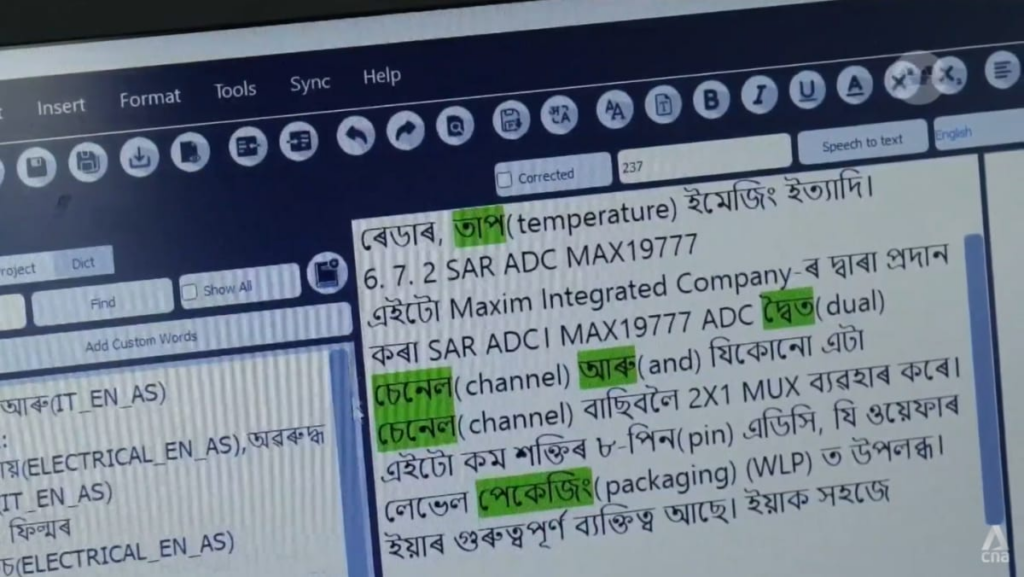

Ganesh Ramakrishnan, a professor at the Indian Institute of Technology Bombay, told CNA that his work involved reaching out to magazines, data sources, foundations and non-governmental organisations that have been gathering data in their local languages.

“(We have been) making it possible to digitise and digitalise and reflect that in the foundational model … so this is a big opportunity,” said Ramakrishnan, who is part of the BharatGen consortium.

EXISTING CHATBOTS ARE INADEQUATE

Some small business owners who have tried using AI in their operations said they have faced language challenges with existing chatbots.

Ghooran Yadav, a food cart owner in New Delhi, said that he used ChatGPT to enquire about the recipe of the food he sells, but received an underwhelming response.

The app understood his question in the local dialect of Bhojpuri but replied in Hindi.